- November 27, 2016

- Posted by: papasiddhi

- Category: Cloud Computing

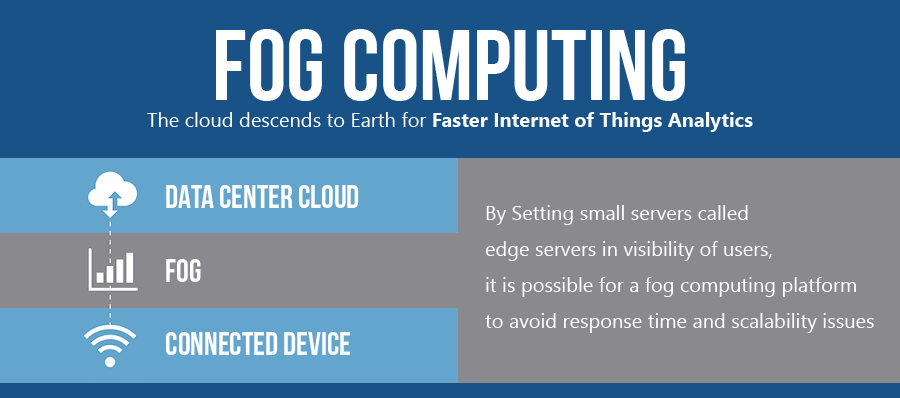

Fog computing is a term coined by Cisco that refers to extending cloud computing to the edge of an enterprise's network. Also known as edge computing or fogging, fog computing facilitates the operation of compute, storage and networking services between end devices and cloud computing data centers.

Cisco unveiled its fog computing vision in January 2014 as a way of bringing cloud computing capabilities to the edge of the network and, as a result, closer to the rapidly growing number of connected devices and applications that consume cloud services and generate increasingly massive volumes of data.

Fog computing, also known as fog networking, is a decentralized computing infrastructure in which computing resources and application services are distributed to the most logical, efficient place at any point along the continuum from the data source to the cloud. The goal of fog computing is to improve efficiency and reduce the amount of data that needs to be transported to the cloud for processing, analysis and storage. This is often done for efficiency reasons, but it may also be done for security and compliance reasons.

Fog computing is also referred to as "edge computing," which essentially means that rather than hosting and working from a centralized cloud, fog systems operate at the edges of the network. This concentration at the edge means that data can be processed locally in smart devices rather than being sent to the cloud for processing. It's one approach to dealing with the Internet of Things (IoT).

Fog computing, like many IT developments, grew out of the need to address a couple of pressing concerns: being able to act in real time on incoming data, and working within the limits of available bandwidth. Today's sensors are generating 2 exabytes of data. That's too much data to send to the cloud: there isn't enough bandwidth, and it costs too much money. Fog computing places some processes and resources at the edge of the cloud instead of establishing channels for cloud storage and utilization. It reduces the need for bandwidth by not sending every bit of information over cloud channels, and instead aggregating it at certain access points. By using this kind of distributed strategy, we can lower costs and improve efficiency.

Fog computing extends the cloud computing paradigm to the edge of the network to address applications and services that do not fit the cloud paradigm, including:

- Applications that require very low and predictable latency

- Geographically distributed applications

- Fast mobile applications

- Large-scale distributed control systems (smart grid, connected rail, smart traffic light systems)

Fog, Cloud and IoT together

The IoT promises to bring the convenience of cloud computing down to earth, equipping every home, vehicle, and workplace with smart, Internet-connected devices. But as our dependence on these newly connected devices grows along with the benefits and uses of an advancing technology, the reliability of the gateways that make the IoT a functional reality must increase, making uptime a near guarantee.

Using robust edge gateways would strengthen the IoT infrastructure by absorbing the impact of processing work before passing it to the cloud. Fog computing can meet requirements for consistently low-latency responses by processing at the edge, and can cope with high traffic volume by using smart filtering and selective transmission. In this way, smart edge gateways can either handle or intelligently redirect the millions of tasks coming from the myriad sensors and monitors of the IoT, transmitting only summary and exception data to the cloud proper.
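The "smart filtering and selective transmission" idea can be illustrated with a minimal Python sketch. It assumes a single numeric sensor stream and a fixed normal operating range; `EdgeGateway`, `low`, `high`, `ingest` and `flush` are hypothetical names chosen for illustration, not part of any Cisco or fog-computing product API. Raw readings stay on the gateway, and each flush produces one compact payload holding a summary plus the out-of-range (exception) readings.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeGateway:
    """Hypothetical fog gateway: buffers raw readings locally and forwards
    only a summary plus out-of-range (exception) readings to the cloud."""
    low: float   # assumed lower bound of the normal operating range
    high: float  # assumed upper bound of the normal operating range
    buffer: list = field(default_factory=list)

    def ingest(self, reading: float) -> None:
        # Raw readings are kept on the gateway; nothing is sent per reading.
        self.buffer.append(reading)

    def flush(self) -> dict:
        # Build the compact payload that would be uploaded to the cloud,
        # then clear the local buffer for the next window.
        if not self.buffer:
            return {"count": 0, "exceptions": []}
        payload = {
            "count": len(self.buffer),
            "mean": mean(self.buffer),
            "min": min(self.buffer),
            "max": max(self.buffer),
            "exceptions": [r for r in self.buffer if not self.low <= r <= self.high],
        }
        self.buffer.clear()
        return payload


# Usage: five readings in, one summary payload out.
gw = EdgeGateway(low=10.0, high=30.0)
for r in [21.0, 22.5, 35.2, 20.1, 8.9]:
    gw.ingest(r)
print(gw.flush())  # exceptions -> [35.2, 8.9]
```

In a real deployment the flush would be triggered by a timer or buffer size and the payload sent over a network protocol; both are left out here to keep the filtering logic in focus.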

The success of fog computing hinges directly on the resilience of those smart gateways handling countless tasks on an internet teeming with IoT devices. IT resilience will be a necessity for the business continuity of IoT operations, with redundancy, security, power and cooling management, and failover solutions in place to ensure maximum uptime. According to Gartner, every hour of downtime can cost an organization up to $300,000. Speed of deployment, cost-effective scalability, and ease of management with limited resources are also chief concerns.
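To put the Gartner figure in perspective, the short Python sketch below converts an availability target into hours of downtime per year and multiplies by the cited upper bound of $300,000 per hour. The availability targets are hypothetical examples, the 8,760-hour year ignores leap years, and the result is a worst-case ceiling rather than an expected loss.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored for simplicity
COST_PER_HOUR = 300_000    # upper bound per hour of downtime, as cited from Gartner


def annual_downtime_hours(availability: float) -> float:
    # Fraction of the year the gateway is down, expressed in hours.
    return (1.0 - availability) * HOURS_PER_YEAR


def worst_case_cost(availability: float, cost_per_hour: float = COST_PER_HOUR) -> float:
    # Ceiling on yearly downtime cost if every lost hour costs the maximum.
    return annual_downtime_hours(availability) * cost_per_hour


# Hypothetical availability targets for an edge gateway.
for target in (0.999, 0.9999, 0.99999):
    print(f"{target:.3%} uptime -> up to ${worst_case_cost(target):,.0f} per year")
```

For example, "three nines" (99.9%) still allows about 8.76 hours of downtime a year, which at the cited rate is up to roughly $2.6 million; each extra nine cuts that ceiling by a factor of ten.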

This evolutionary shift from the cloud to the fog makes complete sense. The original cloud boom began when mobile devices like smartphones and tablets were becoming all the rage. Back then, these devices were weak on computing power, and mobile networks were both slow and unreliable. Therefore, it made complete sense to use a hub-and-spoke cloud architecture for all communications.

But now that most of us are surrounded by reliable 4G networks, and mobile devices rival many PCs in computational power, it makes sense to move from a hub-and-spoke model to one that resembles a mesh or edge computing architecture. Doing so eliminates the bandwidth bottlenecks and latency issues that would otherwise hamper the IoT movement in the long run.

So if you thought that cloud computing was the pinnacle of infrastructure design for the foreseeable future, think again. If we're talking about billions of devices and time-critical communication, current cloud models won't be able to handle the load. Fortunately, advances in mobile processing power and wireless bandwidth have allowed many to design a far more capable architecture that takes us out of the clouds and into the fog.
